Why AI in Hospitality Needs Systems, Not Tools

What Is Changing in Hospitality Right Now

Artificial intelligence is no longer a side experiment in hospitality. It already shapes how travelers discover, evaluate, and choose hotels, often before they ever visit a hotel's website. The decision journey is increasingly mediated by AI-powered interfaces: conversational search, recommendation engines, and “answer engines” that aim to deliver a direct response instead of a list of links.

This matters because hotels are being “read” by machines. And machines don’t interpret your property the same way a human does. They rely on signals: consistency across sources, structured information, and reliable references. When your data is fragmented (policies in PDFs, amenities on OTAs, hours on Google, updates on the website), AI systems form an incomplete mental model of your hotel—and then confidently fill the gaps.

That is why hotel AI systems are no longer just automation tools. They’re becoming part of the hotel’s core infrastructure for trust, discoverability, and brand integrity.

Why AI in Hospitality Is Failing Today

Most AI deployments in hospitality disappoint for a simple reason: AI is introduced as a series of disconnected tools, not as a coherent system. The market is full of point solutions that generate content, respond to reviews, power chat, or summarize analytics. Each can look impressive in isolation. But a hotel is not a set of isolated tasks; it is a web of interconnected operations.

When AI is layered onto an already fragmented stack, it often increases the burden on the hotel team. The AI generates outputs that must be verified. It answers confidently but without traceable sources. It produces content that is technically correct but brand-inconsistent. Instead of reducing complexity, AI becomes another stream of work.

This is why AI for hotel managers often feels risky rather than empowering: the system speaks on behalf of the hotel, but the manager cannot easily see why it said what it said—or whether it’s safe.

The Core Problem with Hotel AI Systems: No Authority

The deepest failure mode of hotel AI systems is not “model quality.” It’s a lack of authority. When AI doesn’t know what is official for a specific property, it guesses. Retrieval-based approaches such as retrieval-augmented generation (RAG) improve accuracy, but they don’t automatically solve the hardest hospitality problem: conflicting sources and unclear precedence. The foundational RAG paper itself names providing provenance for decisions and updating world knowledge as open research problems for knowledge-intensive tasks.

Hotels are especially vulnerable because “truth” is spread across documents and channels created at different times by different people. If two sources disagree—an old brochure and an updated policy—the system needs a rule for which one wins. If a guest asks a nuanced question and the information isn’t verified, the system needs the discipline to stop.
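A precedence rule like that can be small. The Python sketch below is one minimal way to express it, assuming each fact is stored with a source type, an effective date, and a verified flag. The `Fact` record, the `resolve` function, and the `SOURCE_RANK` ordering are all hypothetical; each hotel would define its own ranking. Unverified facts never win, a more authoritative source beats a more recent but weaker one, and when nothing verified exists the function returns `None` so the caller stops instead of guessing.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical ranking: a lower number means a more authoritative source.
SOURCE_RANK = {"policy_document": 0, "pms": 1, "website": 2, "ota_listing": 3, "brochure": 4}


@dataclass
class Fact:
    key: str          # what the fact is about, e.g. "pet_policy"
    value: str        # the statement itself
    source: str       # one of the keys in SOURCE_RANK
    effective: date   # when this version took effect
    verified: bool    # has a manager confirmed it?


def resolve(facts: list[Fact], key: str) -> Optional[Fact]:
    """Return the winning verified fact for `key`, or None if no verified fact exists."""
    candidates = [f for f in facts if f.key == key and f.verified and f.source in SOURCE_RANK]
    if not candidates:
        return None  # no authority: the caller should stop and escalate, not guess
    # Most authoritative source first; among equally ranked sources, the newest date wins.
    return min(candidates, key=lambda f: (SOURCE_RANK[f.source], -f.effective.toordinal()))


facts = [
    Fact("pet_policy", "Pets welcome", "brochure", date(2019, 5, 1), True),
    Fact("pet_policy", "Service animals only", "policy_document", date(2024, 2, 1), True),
]
print(resolve(facts, "pet_policy").value)  # Service animals only
print(resolve(facts, "parking"))           # None
```

Ranking by source before date is a deliberate choice here: a recently reprinted brochure should not silently override an older but official policy.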

Hallucination research consistently shows that LLMs can produce plausible but nonfactual content and that mitigation requires system-level design, not just better prompts.

The takeaway is simple: without a governed source of truth, AI cannot be trusted to represent a hotel.

A New Mental Model for AI in Hospitality: The Command Center

The solution isn’t “more AI.” It’s a better operating model.

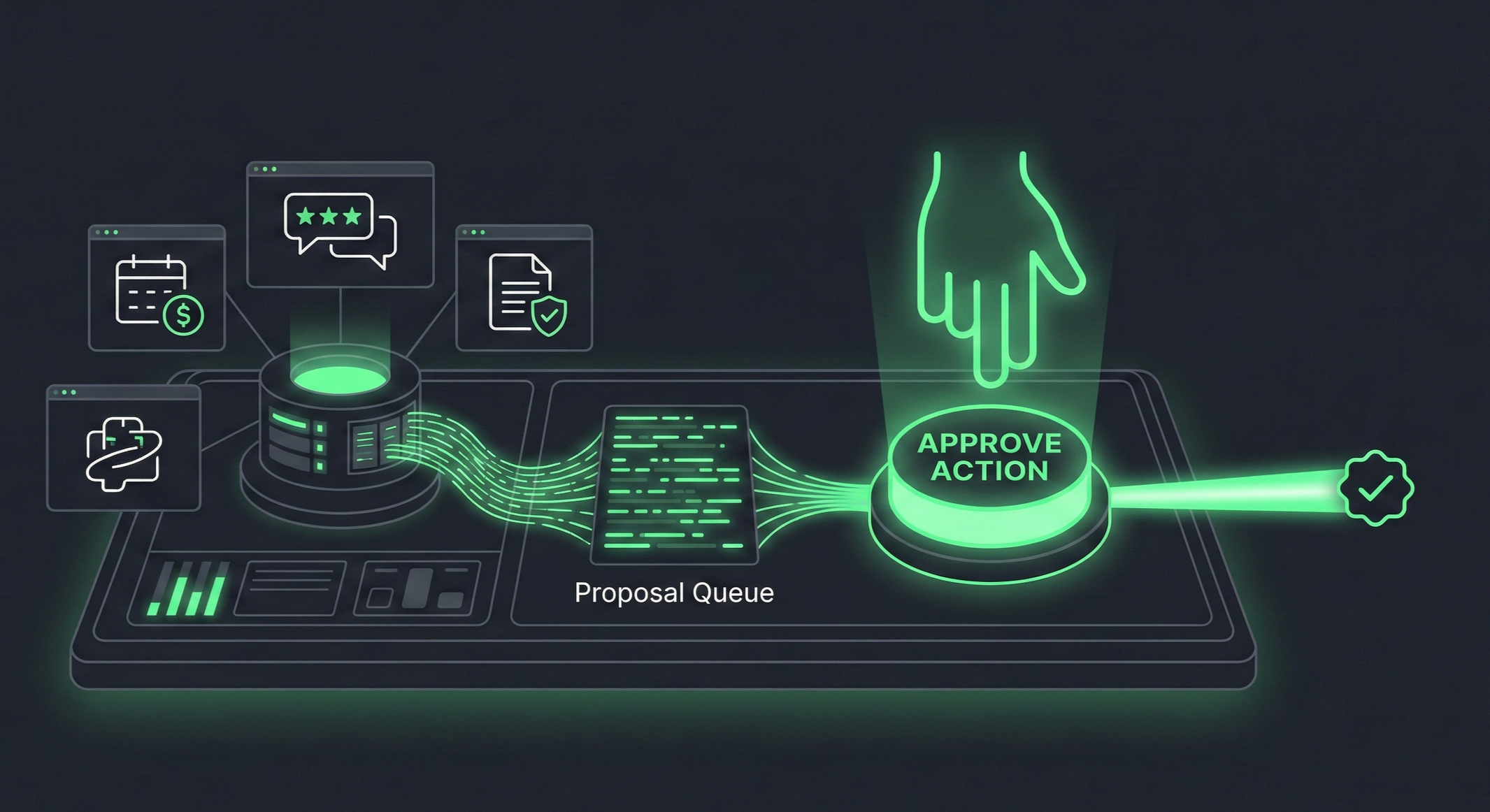

Instead of treating AI as a tool that produces answers, hotels should treat AI as a Command Center: a governed environment where specialized AI capabilities propose actions, and managers approve what goes live.

This matches how hotels already function. Hotels run on delegation, accountability, escalation paths, and standards. An AI system should be designed the same way: clear responsibilities, transparent reasoning, and safe execution pathways. The manager stays in control—not by doing everything manually, but by approving decisions that have already been prepared with evidence.

That is the core promise of AI for hotel managers: not autonomy, but confident delegation.

Why Single AI Assistants Do Not Scale in Hotels

Hospitality is multi-disciplinary by nature. Truth (policies, hours, rules), visibility (how the hotel appears to search and AI), brand (tone, positioning), and reputation (reviews and risks) are different domains requiring different forms of reasoning and different safeguards.

A single “AI assistant” tends to blur these domains. It can sound impressive, but it becomes unpredictable because it lacks boundaries. Modern hotel AI systems scale better when they use a multi-agent approach: separate responsibilities, explicit constraints, and clear handoffs.

This is less about hype and more about reliability. When an AI capability has one job, you can govern it, measure it, and trust it.

The Four Pillars of Trustworthy AI in Hospitality

1. A Truth Layer: Verified Hotel Knowledge

A trustworthy system begins with a Truth Layer: a governed knowledge foundation that defines what is authoritative for the hotel and makes it auditable. This is how you prevent AI from improvising policies. It is also how you enable “show your work” behavior—where any answer can point back to a source.

If the system cannot find verified information, the correct behavior is not to guess. It should say it doesn’t know and escalate the gap. That’s not a weakness; it’s professionalism in a high-trust industry. Hallucination surveys note that plausible but nonfactual output remains a risk even in retrieval-augmented systems, which is why mitigation has to be designed into the system.
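The "show your work, or say you don't know" behavior can be sketched in a few lines of Python. This is a simplified illustration, not a production design: `TruthLayer`, `VerifiedEntry`, and `Reply` are hypothetical names, and lookup by an exact topic key stands in for whatever retrieval a real system would use. Every answer either carries a source identifier or is marked as escalated, and unanswered topics are logged for staff.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VerifiedEntry:
    answer: str
    source_id: str  # hypothetical pointer to the governing document


@dataclass
class Reply:
    text: str
    source_id: Optional[str]  # None when there is no verified source to cite
    escalated: bool


@dataclass
class TruthLayer:
    entries: dict[str, VerifiedEntry]              # topic -> verified answer
    gaps: list[str] = field(default_factory=list)  # open questions for staff

    def answer(self, topic: str) -> Reply:
        entry = self.entries.get(topic)
        if entry is None:
            # Nothing verified: say so and record the gap instead of improvising.
            self.gaps.append(topic)
            return Reply("I don't have verified information on that yet; "
                         "I've passed your question to our team.", None, True)
        # Verified: answer and cite the source so the reply can be audited.
        return Reply(entry.answer, entry.source_id, False)


tl = TruthLayer({"checkout_time": VerifiedEntry("Check-out is at 11:00.", "house-rules-2024.pdf")})
ok = tl.answer("checkout_time")
gap = tl.answer("late_checkout_fee")
print(ok.text, "| source:", ok.source_id)
print(gap.escalated, tl.gaps)
```

The gap list matters as much as the answers: it tells managers exactly which facts are missing from the Truth Layer.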

→ Deep dive: Why AI hallucinates in hotels—and how a Truth Layer fixes it

2. AI Visibility Intelligence: How Machines See Your Hotel

Hotels increasingly compete inside AI-generated answers. That makes visibility a machine-interpretation problem, not just a marketing problem.

This is where structured, consistent information becomes critical. Schema.org provides dedicated guidance for hotel markup, and Google publishes a structured data reference that makes hotel pricing and related details machine readable. Both supply signals that help systems understand and validate hotel information.
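As an illustration of "machine readable," the Python sketch below serializes a few core facts as schema.org `Hotel` markup in JSON-LD, the kind of block typically embedded in a page inside a `<script type="application/ld+json">` element. The hotel details are placeholders, the `hotel_jsonld` helper is hypothetical, and the property selection is illustrative rather than complete; Google's hotel price structured data is a separate format and is not covered here.

```python
import json


def hotel_jsonld(*, name: str, street: str, city: str, country: str,
                 checkin: str, checkout: str, amenities: list[str],
                 pets_allowed: bool) -> str:
    """Serialize core hotel facts as schema.org Hotel markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Hotel",
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressCountry": country,
        },
        "checkinTime": checkin,    # ISO 8601 time, e.g. "15:00:00"
        "checkoutTime": checkout,
        "petsAllowed": pets_allowed,
        "amenityFeature": [
            {"@type": "LocationFeatureSpecification", "name": a, "value": True}
            for a in amenities
        ],
    }
    return json.dumps(data, indent=2)


doc = hotel_jsonld(name="Example Harbour Hotel", street="1 Example Quay",
                   city="Example City", country="US", checkin="15:00:00",
                   checkout="11:00:00", amenities=["Free WiFi", "Indoor pool"],
                   pets_allowed=False)
print(doc)
```

Ideally the inputs come from the same governed source that answers guest questions, so the markup and the answers cannot drift apart.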

Visibility intelligence in modern hotel AI systems answers practical questions: Are we represented consistently across sources? Are we discoverable for high-intent queries? Are we missing key data that prevents AI from recommending us?
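The first of those questions, consistent representation, can be checked mechanically once verified facts and published listings sit side by side. The Python sketch below assumes channel data has already been collected into plain dictionaries (how that happens is out of scope); the channel names, field names, and the `find_inconsistencies` helper are hypothetical, and the case-insensitive string comparison is a deliberate simplification.

```python
def find_inconsistencies(truth: dict[str, str],
                         channels: dict[str, dict[str, str]]) -> list[tuple[str, str, str, str]]:
    """Return (channel, field, published, verified) for every missing or mismatched fact."""
    issues = []
    for channel, listing in channels.items():
        for name, verified in truth.items():
            published = listing.get(name)
            if published is None:
                issues.append((channel, name, "<missing>", verified))
            # Case- and whitespace-insensitive comparison; real data needs richer normalization.
            elif published.strip().lower() != verified.strip().lower():
                issues.append((channel, name, published, verified))
    return issues


truth = {"checkin_time": "15:00", "pets": "service animals only"}
channels = {
    "website": {"checkin_time": "15:00", "pets": "Service animals only"},
    "ota_a": {"checkin_time": "14:00"},
}
for issue in find_inconsistencies(truth, channels):
    print(issue)
```

Missing fields are reported alongside wrong ones because, to a machine reader, an absent fact is a gap it may fill on its own.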

→ Deep dive: AI in hospitality search: how hotels are chosen by machines

3. Brand-Governed Content at Scale

Hospitality brands are not just logos. They’re tone, promises, and consistency across touchpoints. Ungoverned AI content tends to drift toward generic language. Over time, that dilutes what makes a hotel distinct.

Brand-governed AI means the system drafts content within defined constraints (voice, permitted claims, style rules), and managers approve what ships. This turns AI into a high-throughput production assistant without sacrificing identity.
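Those constraints can be enforced before a draft ever reaches a manager. The Python sketch below is a minimal, rule-based pre-check under stated assumptions: `BrandRules`, `CLAIM_PATTERNS`, and `review_draft` are hypothetical, and simple phrase and pattern matching stands in for whatever checks a real brand guide would require. It flags off-brand phrases, claims the hotel has not approved, and overlong copy.

```python
import re
from dataclasses import dataclass


@dataclass
class BrandRules:
    banned_phrases: list[str]  # generic language the brand avoids
    allowed_claims: set[str]   # claims the hotel has verified and may make
    max_words: int = 120


# Hypothetical claim vocabulary the checker looks for in drafts.
CLAIM_PATTERNS = {
    "beachfront": r"\bbeachfront\b",
    "award_winning": r"\baward[- ]winning\b",
    "free_parking": r"\bfree parking\b",
}


def review_draft(draft: str, rules: BrandRules) -> list[str]:
    """Return problems found; an empty list means the draft is ready for manager review."""
    problems = []
    lowered = draft.lower()
    for phrase in rules.banned_phrases:
        if phrase.lower() in lowered:
            problems.append(f"off-brand phrase: {phrase!r}")
    for claim, pattern in CLAIM_PATTERNS.items():
        if re.search(pattern, lowered) and claim not in rules.allowed_claims:
            problems.append(f"unapproved claim: {claim}")
    words = len(draft.split())
    if words > rules.max_words:
        problems.append(f"too long: {words} words (limit {rules.max_words})")
    return problems


rules = BrandRules(banned_phrases=["nestled in the heart of"], allowed_claims={"free_parking"})
draft = "Nestled in the heart of the old town, our award-winning hotel offers free parking."
print(review_draft(draft, rules))
```

A clean result does not publish anything; it only means the draft is worth a manager's time.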

→ Deep dive: Why most AI-generated content fails hotels—and how brand governance fixes it

4. Reputation as an Operational Signal

Reviews are not just public sentiment; they’re operational telemetry. A trustworthy hotel AI system can turn review text into structured signals: recurring irritants, sentiment trends, and risk flags. That enables prevention, not just apology.

This pillar also connects to governance: high-risk topics (safety, discrimination, legal threats) should trigger escalation and human handling by default—because reputation is fragile and response mistakes compound.
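A minimal Python sketch of that idea: count recurring irritants across reviews and route any review that touches a high-risk topic to a person. The keyword lists and function names are hypothetical, and whole-word keyword matching is a stand-in for the trained classifier a production system would more likely use.

```python
import re
from collections import Counter

# Hypothetical keyword lists; a production system would more likely use a trained classifier.
IRRITANTS = {
    "noise": ["noise", "noisy", "loud"],
    "cleanliness": ["dirty", "stain", "smell"],
    "wifi": ["wifi", "wi-fi", "internet"],
}
HIGH_RISK = {
    "safety": ["injury", "injured", "unsafe", "fire"],
    "discrimination": ["discrimination", "discriminated", "racist"],
    "legal": ["lawyer", "lawsuit", "legal action"],
}


def mentions(text: str, keywords: list[str]) -> bool:
    """True if any keyword appears in `text` as a whole word or phrase."""
    return any(re.search(rf"\b{re.escape(k)}\b", text) for k in keywords)


def triage(reviews: list[str]) -> dict:
    irritants = Counter()  # topic -> number of reviews mentioning it
    escalate = []          # (review index, risk topics) for human handling
    for i, review in enumerate(reviews):
        text = review.lower()
        for topic, words in IRRITANTS.items():
            if mentions(text, words):
                irritants[topic] += 1
        risks = [topic for topic, words in HIGH_RISK.items() if mentions(text, words)]
        if risks:
            escalate.append((i, risks))  # no AI-drafted reply by default
    return {"irritants": irritants, "escalate": escalate}


reviews = [
    "Room was noisy and the wifi kept dropping.",
    "Lovely staff, but the street noise was loud.",
    "My son was injured by a broken chair; we have contacted a lawyer.",
]
result = triage(reviews)
print(result["irritants"].most_common())  # [('noise', 2), ('wifi', 1)]
print(result["escalate"])                 # [(2, ['safety', 'legal'])]
```

Irritant counts feed operations (fix the noise problem), while the escalation list feeds governance (a person handles the injury claim).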

→ Deep dive: AI for hotel managers: human-in-the-loop is non-negotiable

Why Human-in-the-Loop Is Essential for Hotel Managers

Hospitality is high stakes. One incorrect claim, one tone-deaf response, or one inconsistent policy statement can create reputational damage that takes months to unwind. This is why human-in-the-loop AI is not optional in hospitality—it is the design pattern that makes AI safe.

This aligns with broader trustworthy-AI governance thinking. NIST’s AI Risk Management Framework frames AI risk as socio-technical and emphasizes lifecycle risk management rather than model-only performance. NIST’s Generative AI Profile extends this thinking specifically to generative systems and their risks.

A practical way to say it is:

The goal is not autonomous AI.

The goal is confident delegation.

What Hotel Leaders Should Focus on Now

If you’re adopting AI today, the strategic question is no longer “Which AI tool should we buy?” It’s “What do we need to govern so AI can represent us safely and consistently?”

Start with three fundamentals. First, define what documents and sources are authoritative and current. Second, make your hotel machine-readable where it matters (structured data, consistent facts). Third, enforce approval workflows for anything public-facing until trust is earned.

This is how AI for hotel managers becomes leverage rather than risk: AI prepares decisions, humans approve execution, and the system becomes more autonomous only as governance proves reliability.
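"More autonomous only as governance proves reliability" can be made explicit as a policy object. The Python sketch below is one possible shape, not a recommendation: `ApprovalPolicy`, the category names, and the threshold values are all hypothetical. Every category starts in mandatory review, earns auto-publish only after enough manager decisions with a high approval rate, loses it again if the rate drops, and some categories never leave human review.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovalPolicy:
    """Decides whether a draft in a given category must wait for a manager.
    Categories, thresholds, and defaults here are hypothetical, not recommendations."""
    min_decisions: int = 50          # track record required before any autonomy
    min_approval_rate: float = 0.98  # share of drafts managers approved
    always_human: set[str] = field(
        default_factory=lambda: {"policy_change", "high_risk_review_reply"})
    history: dict[str, list[bool]] = field(default_factory=dict)

    def record(self, category: str, approved: bool) -> None:
        self.history.setdefault(category, []).append(approved)

    def needs_approval(self, category: str) -> bool:
        if category in self.always_human:
            return True  # some actions never go live without a person
        decisions = self.history.get(category, [])
        if len(decisions) < self.min_decisions:
            return True  # trust not yet earned
        return sum(decisions) / len(decisions) < self.min_approval_rate


policy = ApprovalPolicy(min_decisions=3, min_approval_rate=0.9)
print(policy.needs_approval("faq_update"))  # True: no track record yet
for _ in range(3):
    policy.record("faq_update", approved=True)
print(policy.needs_approval("faq_update"))  # False: 3/3 approved
```

Because autonomy is computed from recorded decisions rather than switched on by hand, every change in the system's freedom is backed by an auditable history.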

AI in Hospitality Is Moving from Tools to Systems

The future of AI in hospitality will not be won by the hotel with the most AI features. It will be won by the hotel with the most reliable, governed, and machine-readable representation of itself.

Hotels do not need “smarter” AI.

They need hotel AI systems built for control, transparency, and trust—so the hotel is represented accurately everywhere AI speaks.

FAQ: AI in Hospitality

What is AI in hospitality?

AI in hospitality refers to the use of artificial intelligence to support hotel operations, guest experience, marketing, reputation management, and decision-making—especially as travelers increasingly rely on AI-powered answers and recommendations. (CXL)

How are hotel AI systems different from traditional hotel software?

Traditional hotel software manages records and workflows. Hotel AI systems interpret information, generate content, and propose actions. That requires governance (sources, constraints, approvals) to be safe and reliable. (NIST Publications)

Why is AI for hotel managers different from guest-facing AI?

AI for hotel managers must prioritize traceability, authority, and risk control. It supports decisions and operations, not just conversations.

What is human-in-the-loop AI in hospitality?

Human-in-the-loop AI ensures that AI-generated public actions—like policy answers, review responses, or content updates—are reviewed and approved by hotel staff before execution, reducing reputational and legal risk. (NIST Publications)

Further Resources

Retrieval-Augmented Generation (RAG) foundational paper. (arXiv)

Survey on hallucinations in large language models (taxonomy + mitigation). (arXiv)

NIST AI Risk Management Framework (trustworthy AI governance). (NIST Publications)

NIST Generative AI Profile (genAI-specific risks and controls). (NIST Publications)

Schema.org hotel markup guidance (machine-readable hospitality). (Schema.org)

Google hotel structured data reference (machine-readable price/fields). (Google for Developers)